Top AI GPU

Artificial Intelligence (AI) has revolutionized various industries, from healthcare to finance, by enabling machines to perform tasks that were once thought to be exclusive to humans. Behind the scenes, powerful AI GPUs (Graphics Processing Units) play a crucial role in accelerating AI computations. In this article, we explore the top AI GPUs on the market and their capabilities.

Key Takeaways

- AI GPUs are essential for speeding up AI computations.

- The top AI GPUs provide exceptional performance for AI applications.

- Choosing the right AI GPU depends on specific needs and budget.

NVIDIA Tesla V100

The NVIDIA Tesla V100 is one of the most powerful GPUs designed for AI workloads. Built on the Volta architecture, it reaches a peak of 125 teraflops of mixed-precision deep learning performance by using Tensor Cores to accelerate matrix operations, which makes it well suited to both AI training and inference. Its HBM2 memory provides up to 900GB/s of bandwidth, so data-intensive AI tasks are less likely to stall waiting on memory. It is widely used in research institutions and large-scale enterprises.
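To see how the compute and bandwidth figures interact, a rough roofline estimate can help. The sketch below is illustrative only: the function names are hypothetical, the arithmetic intensities are made-up sample values, and it uses the peak figures quoted above (125 teraflops, 900GB/s), which real workloads rarely reach.

```python
def machine_balance(peak_tflops: float, bandwidth_gbs: float) -> float:
    """Return the FLOPs the GPU can perform per byte read from memory at peak rates."""
    return (peak_tflops * 1e12) / (bandwidth_gbs * 1e9)


def attainable_tflops(peak_tflops: float, bandwidth_gbs: float,
                      flops_per_byte: float) -> float:
    """Roofline upper bound: the lower of the compute limit and the memory limit."""
    memory_limited = bandwidth_gbs * 1e9 * flops_per_byte / 1e12
    return min(peak_tflops, memory_limited)


# Peak figures quoted in this article for the Tesla V100.
V100_PEAK_TFLOPS = 125.0
V100_BANDWIDTH_GBS = 900.0

if __name__ == "__main__":
    balance = machine_balance(V100_PEAK_TFLOPS, V100_BANDWIDTH_GBS)
    print(f"Break-even intensity: {balance:.1f} FLOPs per byte")
    # Hypothetical arithmetic intensities (FLOPs per byte), for illustration only.
    for intensity in (10, 100, 500):
        bound = attainable_tflops(V100_PEAK_TFLOPS, V100_BANDWIDTH_GBS, intensity)
        print(f"{intensity:>4} FLOPs/byte -> at most {bound:.1f} TFLOPS")
```

A workload that performs fewer FLOPs per byte than the break-even value is limited by memory bandwidth rather than by the Tensor Cores, which is why both numbers matter when comparing cards.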

*Did you know?* The NVIDIA Tesla V100 can deliver up to 100x faster deep learning performance than a traditional CPU, although the actual speedup depends heavily on the model and on the CPU used as a baseline.

AMD Radeon Instinct MI100

The AMD Radeon Instinct MI100 is built on the AMD CDNA architecture, with hardware features tailored for AI and HPC workloads. It pairs 32GB of high-bandwidth HBM2 memory with 11.5 teraflops of peak double-precision (FP64) compute, while its Matrix Cores reach a peak of 184.6 teraflops at half precision (FP16) for deep learning. It leverages Infinity Fabric technology, which allows multiple GPUs to communicate and collaborate effectively, making it suitable for large-scale AI deployments.

*Did you know?* The AMD Radeon Instinct MI100 offers roughly 1.7x the peak theoretical double-precision compute power of the previous-generation MI50 (11.5 versus 6.6 teraflops).

| GPU | Peak Deep Learning Performance (Teraflops, FP16) | Memory Bandwidth (GB/s) |
|---|---|---|
| NVIDIA Tesla V100 | 125 | 900 |
| AMD Radeon Instinct MI100 | 184.6 | 1,228.8 |

Intel Xe-HPG

With its Xe-HPG GPU architecture, Intel aims to make a significant impact on the AI GPU market. Xe-HPG is the gaming-oriented member of Intel's Xe family; the data-center counterpart is Xe-HPC. Although specific details were not available at the time of writing, Intel promises high-performance AI capabilities, efficient power consumption, and broader software compatibility. With a strategic focus on AI, Intel’s Xe-HPG GPUs are expected to bring intense competition to the existing players in the market.

*Did you know?* Intel is actively investing in AI research and development to strengthen its position in the AI GPU market.

Table: AI GPU Comparison

| GPU | Peak Deep Learning Performance (Teraflops, FP16) | Memory Bandwidth (GB/s) | Double-Precision Compute Power (Teraflops) |
|---|---|---|---|
| NVIDIA Tesla V100 | 125 | 900 | 7.8 |
| AMD Radeon Instinct MI100 | 184.6 | 1,228.8 | 11.5 |
| Intel Xe-HPG | TBD | TBD | TBD |

Choosing the Right AI GPU

Choosing the right AI GPU depends on various factors, including budget, specific AI workloads, and future scalability. Consider the following when making a decision:

- Performance: Evaluate the deep learning performance and memory bandwidth based on the specific AI tasks.

- Compatibility: Ensure compatibility with existing AI frameworks, libraries, and software tools.

- Power Consumption: Assess the power consumption and efficiency to optimize energy usage.

- Price: Compare the cost of the GPU, factoring in the budget and long-term investment.
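One way to apply these criteria is to treat compatibility, budget, and power as hard constraints and then rank the remaining cards by performance. The following is a minimal sketch under that assumption; the `Candidate` class, the `shortlist` function, and every card and number in it are hypothetical placeholders rather than real product data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    tflops: float        # throughput at the precision your workload uses
    watts: float         # board power
    price_usd: float
    framework_ok: bool   # supported by the frameworks you already use


def shortlist(candidates, budget_usd, power_cap_watts):
    """Drop cards that break a hard constraint, then rank the rest by throughput."""
    eligible = [
        c for c in candidates
        if c.framework_ok and c.price_usd <= budget_usd and c.watts <= power_cap_watts
    ]
    return sorted(eligible, key=lambda c: c.tflops, reverse=True)


# Placeholder cards with made-up numbers; substitute real specifications.
cards = [
    Candidate("card-a", tflops=30.0, watts=350, price_usd=1500, framework_ok=True),
    Candidate("card-b", tflops=20.0, watts=250, price_usd=900, framework_ok=True),
    Candidate("card-c", tflops=40.0, watts=400, price_usd=10000, framework_ok=True),
    Candidate("card-d", tflops=25.0, watts=300, price_usd=1000, framework_ok=False),
]

if __name__ == "__main__":
    for card in shortlist(cards, budget_usd=2000, power_cap_watts=350):
        print(card.name, card.tflops)
```

Filtering before ranking keeps a fast but unaffordable or unsupported card from ever reaching the top of the list.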

Final Thoughts

As AI continues to advance, the demand for powerful AI GPUs is on the rise. The NVIDIA Tesla V100 and the AMD Radeon Instinct MI100 are two of the leading data-center options, providing exceptional performance and features tailored for AI workloads. However, Intel’s upcoming Xe-HPG GPUs pose a potential challenge to the existing players. Ultimately, choosing the right AI GPU should be based on individual needs and requirements to unlock the full potential of AI technology.

Common Misconceptions

Misconception 1: GPU performance is the only factor to consider in AI

One common misconception is that the performance of a GPU is the sole determinant of AI capabilities. While a powerful GPU is indeed important, there are other crucial considerations to keep in mind:

- AI algorithms and models play a significant role in determining the overall AI performance.

- Memory bandwidth and VRAM capacity also impact the efficiency and speed of AI computations.

- System architecture and overall integration of hardware and software contribute to AI performance as well.

Misconception 2: The latest GPU model is always the best for AI tasks

Another common misconception is that the latest GPU model on the market is automatically the best choice for AI tasks. While newer models generally offer improved features and performance, it is important to consider other factors:

- Compatibility with existing AI frameworks and libraries is crucial to avoid significant code changes and optimization difficulties.

- Cost should also be considered, as the latest models can be considerably more expensive than slightly older models that still offer excellent AI capabilities.

- The specific requirements of the AI tasks at hand should guide the choice of GPU model, as different tasks may benefit from different features and specifications.

Misconception 3: AI can only be done with high-end GPUs

Many people mistakenly believe that AI can only be performed using high-end GPUs. While high-end GPUs are indeed powerful and often recommended for AI tasks, there are situations where other options may be suitable:

- Low-power GPUs or integrated GPUs can still effectively handle certain AI workloads with lower computational demands.

- Cloud-based AI services offer the flexibility to perform AI tasks without the need for a dedicated GPU, instead utilizing remote GPU resources.

- Initial AI development and experimentation can be done using less powerful GPUs, allowing for cost-effective exploration of AI concepts.

Misconception 4: AI GPUs are only beneficial for training models

Some people believe that AI GPUs are only useful for the training phase of AI models and not for inference, the stage where the model is used to make predictions or decisions. However, this is not the case:

- GPUs process data in parallel, which makes real-time inference feasible even with complex models.

- Hardware acceleration provided by GPUs improves the overall performance of AI applications during inference, leading to faster and more responsive systems.

- Dedicated hardware such as tensor cores can significantly speed up inference, making GPUs integral to many AI applications.

Misconception 5: The more GPUs, the better the AI performance

It is often assumed that adding more GPUs will always result in better AI performance, but this is not necessarily true:

- The scalability of AI computations with multiple GPUs depends on the algorithm and framework used. Some algorithms may not effectively leverage multiple GPUs and may experience diminishing returns.

- Memory capacity and bandwidth limitations can arise when using multiple GPUs, potentially impacting performance.

- Utilizing multiple GPUs requires appropriate software and hardware configurations, which can introduce complexity and management overhead.
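The diminishing returns described above can be illustrated with Amdahl's law, a general model of parallel speedup. This is a simplified sketch, not a prediction for any particular framework: the function name is hypothetical, the 90% parallel fraction is an assumed example value, and the model ignores communication and memory overheads, which make real scaling worse.

```python
def amdahl_speedup(parallel_fraction: float, num_gpus: int) -> float:
    """Speedup over one GPU when only part of the work can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_gpus)


if __name__ == "__main__":
    # Assumed example: 90% of the runtime scales across GPUs, 10% does not.
    for gpus in (1, 2, 4, 8, 16):
        print(f"{gpus:>2} GPUs -> {amdahl_speedup(0.9, gpus):.2f}x")
```

With a 90% parallel fraction the speedup can never exceed 10x, no matter how many GPUs are added, because the serial 10% still has to run.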

Introduction

Artificial Intelligence (AI) is revolutionizing industries worldwide, providing remarkable advancements in various fields. A key factor in the success of AI is the use of powerful Graphics Processing Units (GPUs) that enable efficient processing of complex calculations. In this article, we explore the top AI GPUs and highlight key information to help navigate the world of AI technology.

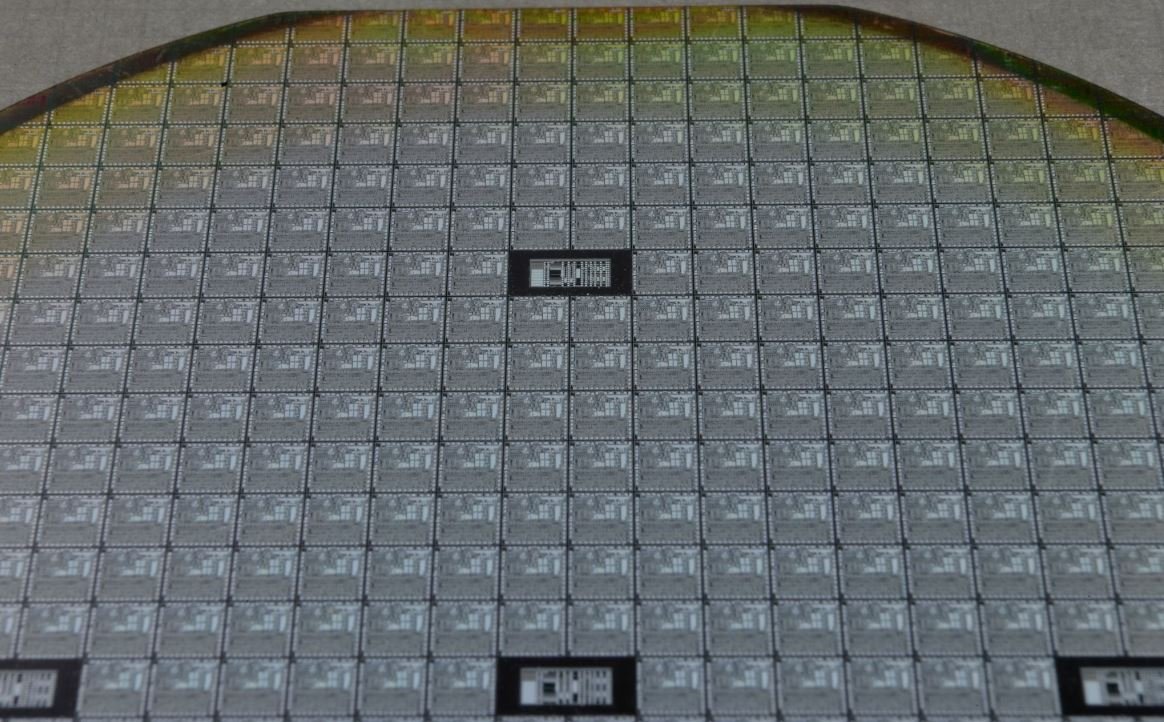

Table – Memory Capacity

Memory capacity is a vital consideration for AI GPU performance. The table below showcases the memory capacities of the top AI GPUs.

| GPU Model | Memory Capacity (GB) |
|---|---|
| NVIDIA RTX 3090 | 24 |
| AMD Radeon RX 6900 XT | 16 |
| NVIDIA A100 | 40 |
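A quick way to relate these capacities to a model is to estimate the memory its weights occupy. The sketch below is a rough lower bound only: the function names and the 20% headroom are assumptions, 1 GB is taken as 10^9 bytes, and activations, gradients, and optimizer state (which dominate during training) are not counted.

```python
# Memory capacities in GB, taken from the table above.
CAPACITY_GB = {
    "NVIDIA RTX 3090": 24,
    "AMD Radeon RX 6900 XT": 16,
    "NVIDIA A100": 40,
}


def weights_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory needed to hold the model weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def cards_that_fit(num_params: int, bytes_per_param: int, headroom: float = 0.2):
    """List cards whose capacity covers the weights plus a fractional headroom."""
    needed = weights_gb(num_params, bytes_per_param) * (1 + headroom)
    return [name for name, capacity in CAPACITY_GB.items() if capacity >= needed]


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7-billion-parameter model
    print("FP16:", weights_gb(params, 2), "GB ->", cards_that_fit(params, 2))
    print("FP32:", weights_gb(params, 4), "GB ->", cards_that_fit(params, 4))
```

Halving the bytes per parameter halves the footprint, which is one reason reduced-precision formats are popular on memory-constrained cards.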

Table – Tensor Cores

Tensor cores play a crucial role in enhancing AI GPU performance. The table below displays the number of tensor cores in the top AI GPUs.

| GPU Model | Tensor Cores |
|---|---|
| NVIDIA RTX 3090 | 328 |
| AMD Radeon RX 6900 XT | N/A |
| NVIDIA A100 | 432 |

Table – Power Consumption

Power consumption is a vital factor to consider when choosing an AI GPU for efficiency. Here is a table demonstrating the power consumption of top AI GPUs.

| GPU Model | Power Consumption (Watts) |
|---|---|
| NVIDIA RTX 3090 | 350 |
| AMD Radeon RX 6900 XT | 300 |
| NVIDIA A100 | 400 (SXM4 module; 250 for the 40 GB PCIe card) |

Table – Performance Comparison

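The performance of an AI GPU is a key factor in applications where speed is crucial. This table compares peak single-precision (FP32) throughput. FP32 alone understates the A100 for deep learning, because its Tensor Cores reach far higher throughput at reduced precision.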

| GPU Model | TFLOPS (FP32) |
|---|---|
| NVIDIA RTX 3090 | 35.6 |
| AMD Radeon RX 6900 XT | 23.0 |
| NVIDIA A100 | 19.5 |

Table – Price Range

Cost is a crucial consideration when investing in AI GPU technology. The following table outlines approximate prices of popular AI GPUs; actual prices vary by vendor and over time.

| GPU Model | Price Range ($) |
|---|---|
| NVIDIA RTX 3090 | 1,499 – 1,699 |
| AMD Radeon RX 6900 XT | 999 |
| NVIDIA A100 | 11,999 |
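Combining the performance, power, and price tables gives two simple efficiency ratios. The sketch below is a rough illustration using only the figures listed in this article; the `efficiency` function is a hypothetical helper, the low end of the RTX 3090 price range is assumed, and FP32 throughput does not reflect Tensor Core performance, so the ratios say little about deep learning speed on the A100.

```python
# (FP32 TFLOPS, power in watts, price in USD), copied from the tables in this article.
SPECS = {
    "NVIDIA RTX 3090": (35.6, 350, 1499),   # low end of the quoted price range
    "AMD Radeon RX 6900 XT": (23.0, 300, 999),
    "NVIDIA A100": (19.5, 400, 11999),
}


def efficiency(tflops: float, watts: float, price_usd: float):
    """Return (GFLOPS per watt, TFLOPS per 1,000 USD)."""
    gflops_per_watt = tflops * 1000 / watts
    tflops_per_kusd = tflops / (price_usd / 1000)
    return gflops_per_watt, tflops_per_kusd


if __name__ == "__main__":
    for name, spec in SPECS.items():
        per_watt, per_kusd = efficiency(*spec)
        print(f"{name}: {per_watt:.1f} GFLOPS/W, {per_kusd:.2f} TFLOPS per $1,000")
```

Ratios like these are most useful for comparing cards within the same class, since data-center cards are priced for features that FP32 throughput does not capture.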

Table – VRAM Type

VRAM (Video Random-Access Memory) type is crucial for efficient data storage and retrieval. The table below showcases the VRAM types used in top AI GPUs.

| GPU Model | VRAM Type |
|---|---|
| NVIDIA RTX 3090 | GDDR6X |
| AMD Radeon RX 6900 XT | GDDR6 |
| NVIDIA A100 | HBM2 |

Table – Form Factor

The form factor of an AI GPU is an important consideration, especially in space-constrained setups. The table below highlights the form factors of top AI GPUs.

| GPU Model | Form Factor |
|---|---|
| NVIDIA RTX 3090 | Triple Slot |
| AMD Radeon RX 6900 XT | 2.5 Slot (reference design) |
| NVIDIA A100 | Dual-Slot PCIe card or SXM4 module |

Table – Release Date

Release dates indicate the technology’s maturity and availability. Here is a table showcasing the release dates of top AI GPUs.

| GPU Model | Release Date |
|---|---|
| NVIDIA RTX 3090 | September 2020 |
| AMD Radeon RX 6900 XT | December 2020 |
| NVIDIA A100 | May 2020 |

Table – PCIe Compatibility

PCIe compatibility is essential for ensuring seamless integration with existing hardware. The following table illustrates the PCIe compatibility of top AI GPUs.

| GPU Model | PCIe Compatibility |
|---|---|
| NVIDIA RTX 3090 | PCIe 4.0 |
| AMD Radeon RX 6900 XT | PCIe 4.0 |
| NVIDIA A100 | PCIe 4.0 |
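PCIe generation matters because it caps how quickly data can move between host memory and the GPU. The following sketch estimates the theoretical one-direction bandwidth of a PCIe 4.0 x16 link from its 16 GT/s per-lane rate and 128b/130b encoding; the function names are hypothetical, and real transfers achieve less because of protocol overhead.

```python
def pcie_bandwidth_gbs(gigatransfers_per_s: float = 16.0, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s for a 128b/130b-encoded link."""
    encoding_efficiency = 128 / 130  # 128 payload bits per 130 bits on the wire
    bits_per_s = gigatransfers_per_s * 1e9 * lanes * encoding_efficiency
    return bits_per_s / 8 / 1e9


def transfer_seconds(size_gb: float, bandwidth_gbs: float) -> float:
    """Best-case time to move size_gb gigabytes over the link."""
    return size_gb / bandwidth_gbs


if __name__ == "__main__":
    x16 = pcie_bandwidth_gbs()
    print(f"PCIe 4.0 x16: {x16:.1f} GB/s per direction")
    # Example: filling a 24 GB card from host memory, best case.
    print(f"24 GB transfer: {transfer_seconds(24, x16):.2f} s")
```

A card placed in a slot wired for fewer lanes gets proportionally less bandwidth, which can matter for workloads that stream data from the host.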

Conclusion

In the world of AI, choosing the right GPU is vital for optimal performance. Through examining various factors such as memory capacity, tensor cores, power consumption, performance, price range, VRAM type, form factor, release dates, and PCIe compatibility, individuals can make informed decisions when investing in AI GPU technology. As technology continues to advance, staying up-to-date with the latest advancements will be crucial in leveraging the power of AI effectively.

Frequently Asked Questions

What is an AI GPU?

An AI GPU (Graphics Processing Unit) is a specialized hardware component designed to accelerate artificial intelligence (AI) tasks. It is optimized for processing large amounts of data and performing complex calculations required in AI applications.

How does an AI GPU differ from a regular GPU?

An AI GPU differs from a regular GPU in architecture and capabilities. AI GPUs are designed with features specifically tailored for deep learning and neural network processing. They typically add dedicated AI acceleration units such as tensor cores, larger pools of high-bandwidth memory, and support for the computing frameworks used in AI.

Why are AI GPUs important for AI applications?

AI GPUs are crucial for AI applications because they significantly speed up the training and inference process of deep learning models. By leveraging the parallel processing power of GPUs, AI algorithms can be executed much faster, enabling efficient training and real-time inference of complex AI models.

Which GPU brands are popular for AI applications?

Popular GPU brands for AI applications include NVIDIA, AMD, and Intel. NVIDIA’s GPUs, especially those from the GeForce RTX and Tesla series, are widely used due to their excellent performance and broad support for AI frameworks such as TensorFlow and PyTorch.

Can I use any GPU for AI applications?

Most modern GPUs can run AI workloads, but GPUs designed for AI tasks are recommended for demanding work. Consumer-grade GPUs often have less memory and may lack some of the optimizations that large models need. It is best to choose a GPU that is compatible with popular AI frameworks and has dedicated AI features.

What are the benefits of using an AI GPU?

Using an AI GPU offers several benefits, including faster training and inference times, improved scalability, and increased productivity. AI GPUs greatly reduce the time required to train complex AI models, allowing researchers and developers to iterate more quickly. Moreover, the parallel processing power of GPUs enables efficient processing of large datasets, enhancing overall AI performance.

Do I need multiple AI GPUs for AI applications?

Using multiple AI GPUs can provide additional computational power and accelerate AI processing even further. However, the need for multiple GPUs depends on the complexity of your AI models and the scale of your applications. Small-scale projects may not require multiple GPUs, while large-scale AI deployments often benefit from parallel processing capabilities provided by multiple GPUs.

What are the system requirements for using AI GPUs?

The system requirements for using AI GPUs vary depending on the specific GPU model and the AI framework being used. Generally, you will need a compatible motherboard with the necessary PCIe slots, sufficient power supply, and appropriate cooling solutions to ensure the GPU operates optimally. Additionally, installing the appropriate GPU drivers and the required AI software frameworks is essential.

Can AI GPUs be used for other tasks besides AI applications?

Yes, AI GPUs can be used for other computationally intensive tasks besides AI applications. Their parallel processing capabilities can be harnessed for various scientific simulations, 3D rendering, and video editing tasks that require significant computing power. However, the primary focus of AI GPUs is to accelerate AI workloads.

How do I choose the right AI GPU for my needs?

When choosing an AI GPU, consider factors such as your specific AI workload requirements, compatibility with popular AI frameworks, availability of software development kits (SDKs), and budget constraints. Researching and comparing the technical specifications, performance benchmarks, and customer reviews of different AI GPU options can help you make an informed decision that aligns with your needs.