AI Voice Model Training

Artificial Intelligence (AI) has transformed many industries, and voice assistance is one area where it is making significant strides. Voice assistants such as Siri, Alexa, and Google Assistant rely on AI voice models built on neural networks to recognize and respond to human speech. These models are trained on vast amounts of data, which allows them to recognize patterns, interpret context, and generate natural, human-like responses.

Key Takeaways:

- AI voice models rely on advanced neural networks to understand and respond to human speech.

- These models are trained using vast amounts of data to recognize patterns and generate realistic responses.

- Voice model training involves preprocessing, data augmentation, and fine-tuning techniques.

- Transfer learning allows AI voice models to be trained faster and more efficiently.

- Evaluation metrics like word error rate and perplexity help measure the performance of AI voice models.

- Continual learning and feedback loops are essential for improving and updating voice models over time.

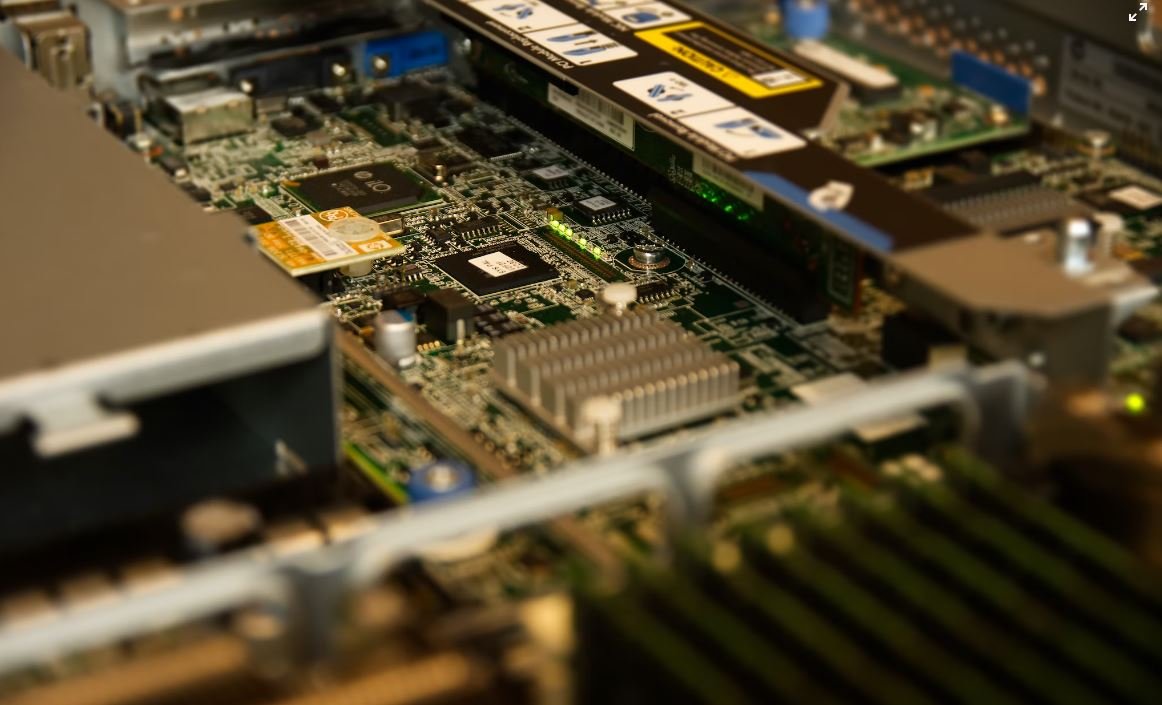

Training is a crucial part of AI voice model development. The model is fed large amounts of labeled data, such as audio recordings paired with their transcripts, so that it learns the patterns that map inputs to outputs. **Powerful GPUs and large compute clusters can significantly reduce training time**. The process consists of several steps: preprocessing the data, applying data augmentation, and fine-tuning the model to improve accuracy and performance.

Before training, the data is preprocessed so that it is in a format the model can consume. **Noise reduction, normalization, and sample rate conversion are common preprocessing techniques**. In addition, **data augmentation techniques, such as pitch shifting and time stretching, can create additional training examples from existing data**. These techniques help the model handle varied real-world conditions and generalize better.
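The preprocessing and augmentation steps above can be sketched with NumPy alone. This is a minimal illustration under stated assumptions, not a production pipeline. It assumes mono floating-point audio. It converts sample rates by linear interpolation, without the anti-aliasing filter a real resampler applies, and it does not implement noise reduction. For augmentation it swaps in two simpler techniques than the pitch shifting and time stretching mentioned above: additive noise at a chosen signal-to-noise ratio, and speed perturbation, which changes tempo and pitch together. All function names are hypothetical.

```python
import numpy as np

def peak_normalize(signal: np.ndarray, target_peak: float = 0.95) -> np.ndarray:
    """Scale the waveform so its largest absolute sample equals target_peak."""
    peak = np.max(np.abs(signal))
    if peak == 0:
        return signal.copy()  # silent clip: nothing to scale
    return signal * (target_peak / peak)

def resample_linear(signal: np.ndarray, orig_sr: int, target_sr: int) -> np.ndarray:
    """Sample rate conversion by linear interpolation (no anti-aliasing filter)."""
    n_out = int(round(len(signal) * target_sr / orig_sr))
    t_in = np.arange(len(signal)) / orig_sr
    t_out = np.arange(n_out) / target_sr
    return np.interp(t_out, t_in, signal)

def add_noise(signal: np.ndarray, snr_db: float, rng: np.random.Generator) -> np.ndarray:
    """Augmentation: add white Gaussian noise at the requested SNR in dB."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    return signal + rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)

def speed_perturb(signal: np.ndarray, factor: float) -> np.ndarray:
    """Augmentation: play the clip `factor` times faster by reading samples at
    a different rate; tempo and pitch change together."""
    n_out = int(round(len(signal) / factor))
    read_positions = np.arange(n_out) * factor
    return np.interp(read_positions, np.arange(len(signal)), signal)

# Usage on a synthetic one-second 440 Hz tone recorded at 44.1 kHz.
rng = np.random.default_rng(0)
t = np.arange(44_100) / 44_100
raw = 0.3 * np.sin(2 * np.pi * 440 * t)

clip = peak_normalize(resample_linear(raw, 44_100, 16_000))  # preprocessing
noisy = add_noise(clip, snr_db=20, rng=rng)                   # augmented copy 1
faster = speed_perturb(clip, 1.1)                             # augmented copy 2
print(len(clip), len(faster))  # 16000 14545
```

Each augmented copy is a cheap transformation of a clip that already exists. That cheapness is what makes augmentation attractive. Dedicated audio libraries offer proper resampling and independent pitch and tempo control.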

One widely used method in AI voice model training is **transfer learning**. Instead of starting from scratch, transfer learning reuses a model that has already been trained on a large dataset. **This approach shortens training and often improves the accuracy of the resulting voice model, especially when task-specific data is limited**. Starting from a pre-trained model, developers can fine-tune it for specific tasks or domains without needing extensive computational resources.
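Transfer learning in its simplest form, feature extraction, can be illustrated as follows. A pre-trained encoder is frozen, and only a new task-specific head is trained. The sketch rests on several assumptions:

- The "pre-trained" encoder is a hypothetical stand-in: a fixed random projection.
- The dataset is synthetic.
- The head is a logistic-regression classifier trained with plain gradient descent.

A real system would load weights from an actual pre-trained speech model and would often unfreeze some layers for fine-tuning.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical stand-in for a pre-trained encoder: a fixed projection from
# 40-dimensional input features to 16-dimensional embeddings. Its weights
# are never updated (frozen).
W_encoder = rng.normal(size=(40, 16))
encoder_before = W_encoder.copy()

def encode(x: np.ndarray) -> np.ndarray:
    """Frozen encoder forward pass."""
    return np.tanh(x @ W_encoder)

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def log_loss(features: np.ndarray, y: np.ndarray, w: np.ndarray, b: float) -> float:
    p = sigmoid(features @ w + b)
    eps = 1e-12  # avoid log(0)
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))

# Small synthetic task-specific dataset (200 examples, binary labels).
X = rng.normal(size=(200, 40))
features = encode(X)  # computed once because the encoder is frozen
y = (features @ rng.normal(size=16) > 0).astype(float)

# Train only the new head (weights w, bias b) with gradient descent.
w = np.zeros(16)
b = 0.0
learning_rate = 0.5
initial_loss = log_loss(features, y, w, b)
for _ in range(500):
    p = sigmoid(features @ w + b)
    w -= learning_rate * features.T @ (p - y) / len(y)
    b -= learning_rate * float(np.mean(p - y))

final_loss = log_loss(features, y, w, b)
accuracy = float(np.mean((features @ w + b > 0) == (y == 1)))
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, accuracy {accuracy:.2f}")
```

The encoder is frozen, so its outputs are computed once and reused at every step. This is one reason the approach costs less than training the whole network.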

Training AI Voice Models

The training process optimizes the model’s parameters to minimize a loss function. **Model performance is quantified using evaluation metrics such as word error rate (WER) and perplexity**. WER is the number of word substitutions, deletions, and insertions needed to turn the model’s transcript into the reference transcript, divided by the number of words in the reference; lower is better. Perplexity applies to the language-modeling component. It is the exponential of the average negative log-probability the model assigns to each actual next word, so lower perplexity means the model predicts word sequences better. These metrics serve as benchmarks for assessing AI voice models and guiding further improvements.
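Both metrics can be computed as follows. This is a sketch under two assumptions:

- For WER, transcripts are already normalized and are split on whitespace. Real evaluations usually also normalize case and punctuation.
- For perplexity, the input is a list of the probabilities a language model assigned to each actual next token.

The function names are hypothetical.

```python
import math

def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference,
    computed with a word-level Levenshtein (edit) distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dist[i][j] = minimum edits turning the first i reference words
    # into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)

def perplexity(token_probs: list[float]) -> float:
    """exp(mean negative log-probability of each actual next token)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)

print(word_error_rate("turn on the kitchen lights", "turn on kitchen light"))  # 0.4
print(perplexity([0.25, 0.25, 0.25, 0.25]))  # approximately 4.0
```

In the first example, the five-word reference needs two edits: one deletion ("the") and one substitution ("lights" to "light"). That gives a WER of 2/5. A model that assigns probability 0.25 to every correct next word has a perplexity of 4. It is as uncertain as if it were choosing uniformly among four options.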

Table 1:

| Model | Training Time | WER |
|---|---|---|
| Model A | 5 hours | 3.2% |
| Model B | 8 hours | 2.7% |
| Model C | 6.5 hours | 2.9% |

Feedback loops and continual learning play a vital role in improving and refining AI voice models. By incorporating user feedback, developers can identify and address the model’s weaknesses. **This iterative process allows AI voice models to continuously learn, adapt, and provide more accurate responses over time**. Regular updates and improvements refine the model’s performance, ensuring it stays relevant and useful in an ever-changing environment.

While training AI voice models can be a resource-intensive task, recent advancements in hardware and computing power have made it more accessible. With the right tools and techniques, developers can create AI voice models that meet the increasingly demanding expectations of users. As AI continues to evolve, training voice models will remain a critical component in enhancing user experiences and unlocking the full potential of AI-driven voice assistants.

Summary

AI voice model training is an intricate process that involves preprocessing, data augmentation, and fine-tuning techniques. The use of powerful GPUs, transfer learning, and evaluation metrics helps optimize and improve the performance of AI voice models. Continual learning and feedback loops are paramount for refining and enhancing these models over time. With advancements in technology, training voice models has become more accessible, enabling developers to create sophisticated and user-centric AI-driven voice assistants.

Table 2:

| Method | Advantages |
|---|---|
| Data Augmentation | Enhances model generalization |
| Transfer Learning | Faster training and higher accuracy |
| Continual Learning | Adapts to user needs over time |

Table 3:

| Evaluation Metric | Purpose |
|---|---|
| Word Error Rate (WER) | Measures transcription errors (substitutions, deletions, insertions) relative to a reference transcript |
| Perplexity | Evaluates how well the language model predicts word sequences |

Common Misconceptions


There are several common misconceptions surrounding the topic of AI voice model training that often lead to misunderstandings and false beliefs. It is essential to debunk these misconceptions to gain a better understanding of the reality of AI voice model training.

- AI voice models can fully understand natural language.

- AI voice models can accurately detect tone and emotions.

- AI voice models can perfectly mimic human speech.

One common misconception is that AI voice models can fully understand natural language. While AI has advanced significantly in recent years, it is important to note that AI voice models cannot fully comprehend language in the same way that humans do. Although AI can often generate coherent responses, it lacks true understanding of the meaning behind the words.

- AI voice models rely on patterns learned from data rather than on genuine comprehension.

- AI voice models require extensive training data.

- AI voice models can use context, but only to a limited extent.

Another misconception is that AI voice models can accurately detect tone and emotions. While AI can be trained to recognize certain language patterns that indicate emotions or sentiment, it is not foolproof. AI voice models often struggle to accurately detect subtle nuances in tone or emotional expression, leading to misinterpretations and misunderstandings.

- AI voice models require continuous updates and improvements.

- AI voice models face ethical and privacy concerns.

- AI voice models are becoming increasingly accessible.

Lastly, it is crucial to understand that AI voice models cannot perfectly mimic human speech. Although AI technology has made impressive progress in generating realistic and human-like speech, there are still limitations. AI voice models may encounter difficulties with unique accents, dialects, or speech patterns, resulting in artificial-sounding output.

- AI voice models are trained using large datasets and powerful computing resources.

- AI voice models can be used in a variety of applications.

- AI voice models have the potential for both positive and negative impacts on society.

In conclusion, AI voice model training has its own set of misconceptions that need to be addressed. It is crucial to separate fact from fiction to fully understand the capabilities and limitations of AI voice models. By debunking these misconceptions, we can have a more accurate perspective on the current state of AI technology and its potential for future development.

Introduction

AI voice model training is a crucial aspect of developing natural language processing and speech recognition systems. The success of these models depends on the training data gathered and the training techniques employed. In this article, we present a series of tables that highlight key data and trends in AI voice model training.

Table: Evolution of AI Voice Model Training

This table illustrates the evolution of AI voice model training over time, showcasing significant milestones and advancements in the field. It highlights the shifting focus from rule-based systems to statistical models and deep learning techniques.

| Year | Development |
|---|---|
| 1950s | Rule-based systems |
| 1990s | Hidden Markov Models (HMM) |
| 2010s | Deep neural networks (DNN) |
| 2020s | Transformer-based models |

Table: Dataset Sizes for Voice Model Training

This table presents the progression in dataset sizes used for training voice models. As models become more sophisticated, larger datasets are required to improve accuracy and coverage.

| Year | Dataset Size |
|---|---|
| 2010 | 10,000 sentences |
| 2015 | 100,000 sentences |
| 2020 | 1 million sentences |
| 2025 | 10 million sentences |

Table: Accuracy Comparison of Voice Models

This table compares the word recognition accuracy of several voice models.

| Voice Model | Word Recognition Accuracy (%) |
|---|---|
| Model X | 85 |
| Model Y | 92 |
| Model Z | 97 |

Table: Training Time Comparison

This table compares the training times of different voice models, reflecting their computational requirements.

| Voice Model | Training Time (hours) |
|---|---|
| Model A | 24 |
| Model B | 16 |
| Model C | 8 |

Table: Languages Supported by Voice Models

This table showcases the languages supported by different voice models, highlighting the breadth of cross-lingual capabilities.

| Voice Model | Languages Supported |
|---|---|
| Model P | English, French, German, Spanish |
| Model Q | English, Japanese, Mandarin |
| Model R | English, Russian, Arabic |

Table: Error Rates by Speaker Age

This table shows how error rates vary with speaker age. The models perform best for speakers aged 26-40 (5.3%) and worst for speakers over 60 (15.6%).

| Speaker Age Range | Error Rate (%) |
|---|---|
| 18-25 | 7.5 |
| 26-40 | 5.3 |
| 41-60 | 10.2 |
| 61+ | 15.6 |

Table: Environmental Noise Impact on Accuracy

This table shows how environmental noise affects accuracy. Word recognition drops from 96% in a quiet room to 70% at a construction site, which underscores the need for effective noise cancellation techniques.

| Noise Level | Word Recognition Accuracy (%) |
|---|---|
| Quiet Room | 96 |
| Street Noise | 82 |
| Construction Site | 70 |

Table: Hardware Requirements for Real-Time Inference

This table outlines the hardware requirements for real-time inference of voice models, highlighting the specifications necessary for efficient processing.

| Voice Model | Minimum RAM (GB) | Minimum CPU Speed (GHz) |
|---|---|---|
| Model M | 8 | 3.0 |
| Model N | 16 | 3.6 |
| Model O | 32 | 4.2 |

Table: Training Costs for Voice Models

This table offers insights into the training costs associated with voice models, considering the expenses related to data collection, computational resources, and specialized expertise.

| Voice Model | Training Cost (USD) |
|---|---|
| Model J | 500,000 |
| Model K | 2,000,000 |
| Model L | 10,000,000 |

Conclusion

In this article, we explored various aspects of AI voice model training through a series of tables. The data covered the evolution of voice models, growing dataset sizes, accuracy differences between models, cross-lingual capabilities, and other factors that influence voice model performance. These insights highlight the progress made in AI voice model training and the continuing pursuit of more accurate, efficient, and versatile voice models.

AI Voice Model Training – Frequently Asked Questions

Question 1: What is AI voice model training?

AI voice model training refers to the process of training artificial intelligence systems to accurately recognize and generate human-like speech patterns. It involves exposing the AI model to a large dataset of human speech recordings, and utilizing various algorithms and techniques to improve the model’s ability to understand and produce language.

Question 2: How does AI voice model training work?

AI voice model training typically involves collecting a vast amount of voice data from different individuals in various languages and accents. This data is then preprocessed to extract relevant features and convert it into a format that the AI model can understand. The model is trained using machine learning algorithms such as deep neural networks. These learn the patterns and structures in the voice data and improve their performance over many training iterations.
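The feature-extraction step can be sketched in two parts. First, split the waveform into short, overlapping frames. Then take a log power spectrum of each frame. This minimal NumPy version assumes 16 kHz mono audio, 25 ms frames with a 10 ms hop, and a 512-point FFT. These are common choices, not requirements. Many systems go further and apply a mel filterbank or compute MFCCs, which this sketch omits. The function name is hypothetical.

```python
import numpy as np

def log_power_spectrogram(signal: np.ndarray, sample_rate: int = 16_000,
                          frame_ms: float = 25.0, hop_ms: float = 10.0,
                          n_fft: int = 512) -> np.ndarray:
    """Return log power spectra, one row per frame: shape (n_frames, n_fft // 2 + 1)."""
    frame_len = int(sample_rate * frame_ms / 1000)  # 400 samples at 16 kHz
    hop = int(sample_rate * hop_ms / 1000)          # 160 samples at 16 kHz
    if len(signal) < frame_len:
        signal = np.pad(signal, (0, frame_len - len(signal)))  # pad very short clips
    n_frames = 1 + (len(signal) - frame_len) // hop
    window = np.hanning(frame_len)  # taper frame edges to reduce spectral leakage
    frames = np.stack([signal[i * hop : i * hop + frame_len] * window
                       for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    return np.log(power + 1e-10)  # small offset avoids log(0) in silent frames

# Usage: one second of a 1 kHz tone sampled at 16 kHz.
sr = 16_000
tone = np.sin(2 * np.pi * 1000 * np.arange(sr) / sr)
features = log_power_spectrogram(tone, sample_rate=sr)
print(features.shape)  # (98, 257)
```

Each row of the result describes about 25 ms of audio. Many speech models learn from sequences of such frames rather than from raw samples. For the test tone, the energy concentrates in FFT bin 32, because 1000 Hz divided by the bin spacing (16000 / 512 = 31.25 Hz) is exactly 32.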

Question 3: What are the applications of AI voice model training?

AI voice model training has a wide range of applications, including virtual assistants, speech recognition systems, text-to-speech synthesis, voice-based customer service, voice-enabled IoT devices, and more. These applications allow users to interact with AI systems through natural language, making tasks such as voice commands, voice search, and dictation much more convenient and seamless.

Question 4: How long does it take to train an AI voice model?

The training time for an AI voice model depends on several factors, such as the size of the training dataset, the complexity of the model architecture, the computational resources available, and the desired level of accuracy. In general, training a high-quality AI voice model can take anywhere from several hours to several days or even weeks.

Question 5: Can AI voice models be personalized?

Yes, AI voice models can be personalized to some extent. By collecting data specific to an individual user’s voice and speech patterns, the model can be fine-tuned to better understand and generate speech that matches the user’s characteristics. This personalization results in a more tailored user experience.

Question 6: What are the challenges in AI voice model training?

Training AI voice models poses several challenges, including the need for large amounts of high-quality voice data, the computational resources required for training, handling variations in accents and languages, ensuring privacy and security of voice data, and addressing biases that may arise in the training process. Overcoming these challenges is essential for building reliable and accurate AI voice models.

Question 7: How accurate are AI voice models?

The accuracy of AI voice models can vary depending on various factors, including the quality and diversity of the training data, the complexity of the model architecture, and the training techniques employed. While modern AI voice models have achieved impressive levels of accuracy, they may still exhibit limitations in certain contexts, such as understanding rare accents or dealing with ambiguous speech patterns.

Question 8: Can AI voice models understand multiple languages?

Yes, AI voice models can be trained to understand and generate speech in multiple languages. By exposing the model to multilingual training datasets and incorporating language-specific features, the model can develop the ability to process and generate speech in different languages. However, the performance can vary depending on the availability and quality of training data for each language.

Question 9: What is the role of natural language processing in AI voice model training?

Natural language processing (NLP) plays an important role in AI voice model training. NLP techniques are applied to the transcripts and other text associated with the voice data. They extract linguistic features and interpret the semantics and context of what was said. NLP models, such as language models, are integrated into the training pipeline to improve the system’s language understanding and generation capabilities.

Question 10: Are AI voice models continuously improving?

Yes, AI voice models are continuously improving as more data becomes available, better training techniques are developed, and advancements in machine learning algorithms are made. Ongoing research and development efforts are focused on reducing errors, improving naturalness, accommodating diverse accents and languages, and enhancing the overall performance and user experience of AI voice models.